It's Not Your Engine. It's Your List.

Clean, context-rich PEP control lists are the only scalable way to cut false positives.

Real talk: your name isn't unique

Unless you're literally named "X Æ A-Xii" (Elon Musk's child's name), your name isn't unique. Screening engines see millions of name look-alikes; that's why you need other identifiers - DOB, jurisdiction, role dates, addresses, and well-structured aliases - to triangulate who you actually are and cut false positives.

TL;DR

Upgrade to clean, context-rich, time-bounded PEP data and watch false positives drop - without torturing thresholds.

Engines are smart—lists must be smarter

Modern screening stacks blend fuzzy matching, phonetics, transliteration, nickname mapping, and name-order logic to estimate match probability. What they can't do is invent context your list doesn't supply.

- No DOB? Larger candidate sets and more noise.

- No jurisdictions? More cross-border overlaps.

- No role dates? Yesterday's official pollutes today's matches.

Control list quality > endless retuning.
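To make the point concrete, here is a toy name matcher covering a few of the techniques listed above. It uses accent folding as a crude stand-in for transliteration, plus nickname mapping, name-order normalization, and a fuzzy ratio from Python's standard `difflib`. Phonetic encoding is left out. `NICKNAMES`, `normalize`, and `name_similarity` are hypothetical names for illustration, not any vendor's engine.

```python
import unicodedata
from difflib import SequenceMatcher

# Hypothetical nickname table; production engines use large curated ones.
NICKNAMES = {"bill": "william", "bob": "robert", "liz": "elizabeth"}


def normalize(name: str) -> str:
    """Fold accents, lowercase, map nicknames, and sort tokens."""
    # NFKD splits accented letters into base letter + combining mark;
    # dropping non-ASCII then keeps the base letter (crude transliteration).
    folded = unicodedata.normalize("NFKD", name).encode("ascii", "ignore").decode("ascii")
    tokens = folded.lower().replace(",", " ").split()
    # Sorting tokens makes "Gates, William" and "William Gates" identical.
    return " ".join(sorted(NICKNAMES.get(t, t) for t in tokens))


def name_similarity(a: str, b: str) -> float:
    """Fuzzy similarity in [0, 1] on normalized names (difflib ratio)."""
    return SequenceMatcher(None, normalize(a), normalize(b)).ratio()


print(name_similarity("Bill Gates", "Gates, William"))  # 1.0
print(name_similarity("José Álvarez", "Jose Alvarez"))  # 1.0
```

Both pairs score 1.0, and so would two different people who happen to share a name. The score measures name likeness, not identity; that gap is what DOB, jurisdiction, and role dates in the list have to fill.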

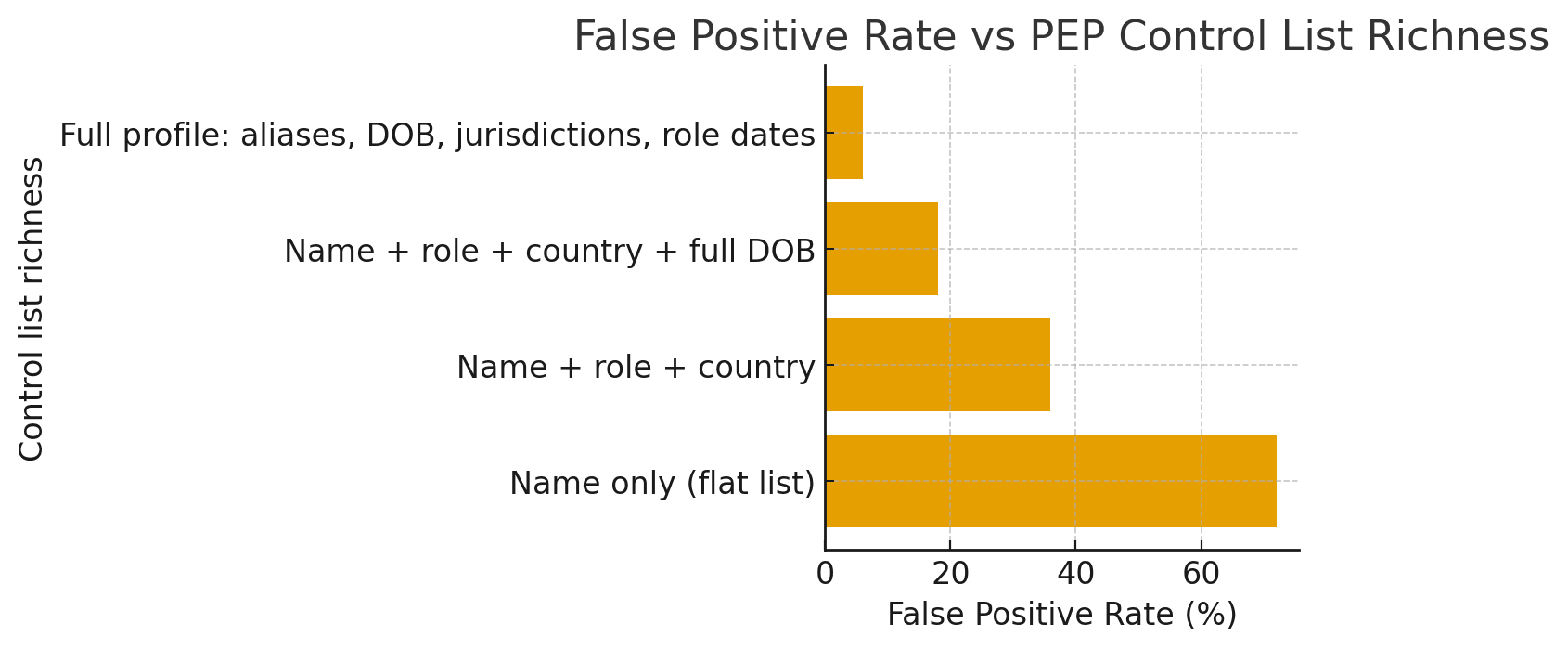

False Positive Rate vs PEP Control List Richness

Richer PEP records (DOB, role dates, jurisdictions, aliases) shrink the candidate set before scoring.
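A minimal sketch of that pre-scoring step, assuming the engine has already pulled name look-alikes from the list. `PepRecord`, `Client`, and `narrow_candidates` are hypothetical names, and exact DOB comparison is a simplifying assumption (partial dates such as year-only DOBs would need a looser check).

```python
from dataclasses import dataclass
from datetime import date
from typing import FrozenSet, List, Optional


@dataclass(frozen=True)
class PepRecord:
    # Hypothetical list-record shape; field names are illustrative.
    name: str
    dob: Optional[date] = None
    jurisdictions: FrozenSet[str] = frozenset()


@dataclass(frozen=True)
class Client:
    name: str
    dob: date
    jurisdiction: str


def narrow_candidates(client: Client, alikes: List[PepRecord]) -> List[PepRecord]:
    """Drop name look-alikes whose list context rules the client out.

    A field the list leaves empty cannot exclude anyone, so sparse
    records always survive to the noisier scoring step.
    """
    kept = []
    for rec in alikes:
        if rec.dob is not None and rec.dob != client.dob:
            continue  # DOB on file and it differs: different person
        if rec.jurisdictions and client.jurisdiction not in rec.jurisdictions:
            continue  # no jurisdictional overlap
        kept.append(rec)
    return kept


# Three list entries that all share the client's name.
alikes = [
    PepRecord("Maria Garcia", date(1970, 5, 1), frozenset({"ES"})),
    PepRecord("Maria Garcia", date(1985, 2, 9), frozenset({"MX"})),
    PepRecord("Maria Garcia"),  # name-only legacy entry
]
client = Client("Maria Garcia", date(1970, 5, 1), "ES")
kept = narrow_candidates(client, alikes)
print(len(kept))  # 2: the matching enriched record and the name-only one
```

The name-only record survives because, without context, nothing can rule it out. Multiply that across a legacy list and you get the broad candidate sets the chart describes.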

Reality check: when lists are garbage, FPR can hit 100%

We've seen programs where the upstream PEP source was so poor that the false-positive rate hit 100%: every single alert was junk. That's not an engine problem; that's a list-quality problem.

Key point: Name-only, undated roles, missing jurisdictions, and absent DOBs create candidate sets so broad that precision collapses. No amount of threshold tweaking fixes missing context.

Regulatory reality: you can't turn off PEP screening—so do this

- Upgrade the control list: full DOBs, role start/end dates, role jurisdictions, structured aliases, RCAs, and source lineage.

- Time-bound roles: explicitly retire signals when a term ends to avoid "forever PEP" matches.

- Boost precise matches: give higher weight to role-dated + DOB-confirmed candidates; de-emphasize name-only fuzz.

- Delta updates: ingest new appointments quickly and capture exits - yesterday's data shouldn't pollute today.

- Analyst feedback loop: capture dispositions (TP/FP) to refine aliasing and edge-case heuristics in the list.

Bottom line: compliance stays on, noise goes down, analysts focus on real risk.
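The time-bounding and boosting bullets can be sketched as below. `Role`, `role_is_active`, `match_score`, the `grace_days` window, and all three weights are hypothetical; the weights are placeholders chosen only so that a DOB-confirmed, role-dated candidate outranks a perfect name-only match.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass(frozen=True)
class Role:
    title: str
    start: date
    end: Optional[date] = None  # None means the role is still held


def role_is_active(role: Role, as_of: date, grace_days: int = 0) -> bool:
    """True if the role is held on `as_of`, optionally extended past term end.

    `grace_days` is a hypothetical policy knob for any post-term window
    your program applies; 0 retires the signal the day after the term ends.
    """
    if as_of < role.start:
        return False
    if role.end is None:
        return True
    return as_of <= role.end + timedelta(days=grace_days)


# Placeholder weights (assumptions): name likeness alone is capped at 0.5,
# and each independent confirmation adds evidence on top.
NAME_WEIGHT = 0.5
DOB_BONUS = 0.3
ACTIVE_ROLE_BONUS = 0.2


def match_score(name_similarity: float, dob_confirmed: bool, role_active: bool) -> float:
    """Combine name likeness with context confirmations into a 0..1 score."""
    score = NAME_WEIGHT * min(max(name_similarity, 0.0), 1.0)
    if dob_confirmed:
        score += DOB_BONUS
    if role_active:
        score += ACTIVE_ROLE_BONUS
    return score


minister = Role("Minister of Finance", date(2015, 1, 1), date(2019, 12, 31))
print(role_is_active(minister, date(2024, 6, 1)))  # False: term ended in 2019
print(round(match_score(1.0, False, False), 2))    # 0.5: perfect name, no context
print(round(match_score(0.6, True, True), 2))      # 0.8: weaker name, confirmed context
```

Capping the name component is one simple way to "de-emphasize name-only fuzz": no amount of string similarity alone can push a candidate past one that carries confirmed context.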

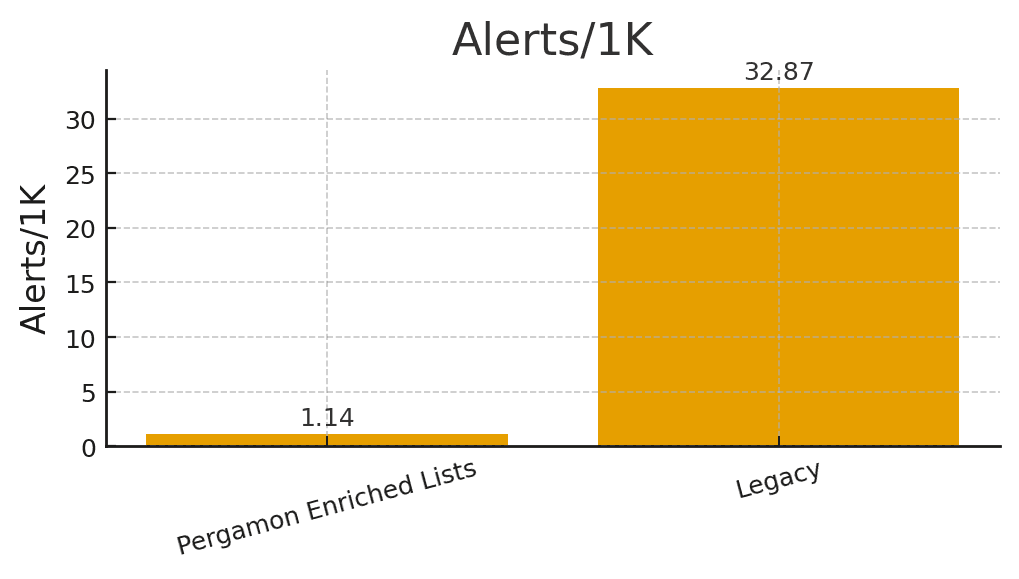

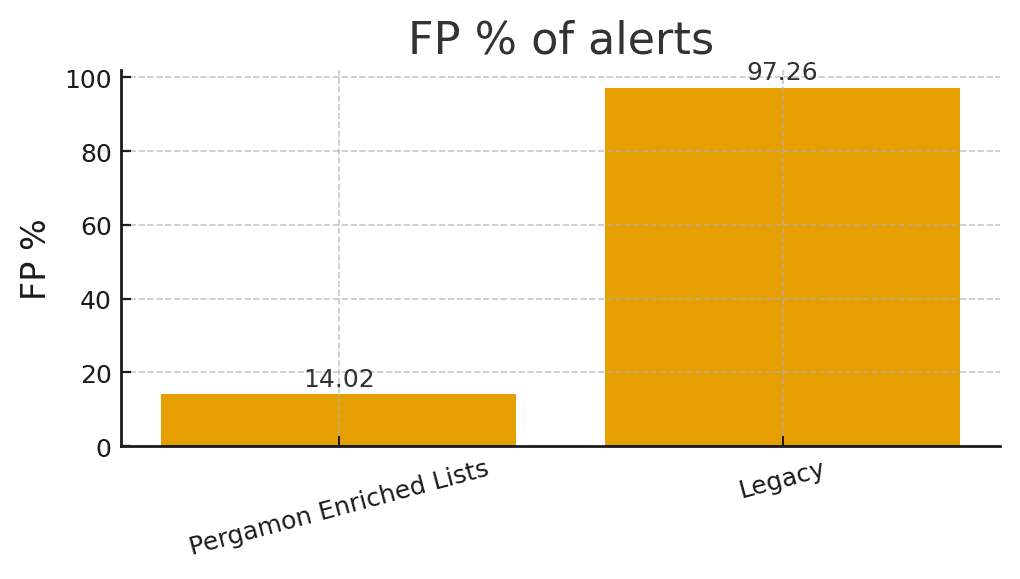

"100 Men vs 1 Gorilla" simulation (100,000 clients with full CIP)

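We compared Pergamon Enriched Lists (DOB, role dates, jurisdictions, aliases, sources) with a Legacy name-heavy list (DOB on 50% of records, addresses on 50%, sources on 15%). Assumptions: true PEP prevalence of 0.1% (100 of the 100,000 clients), a 4% name-collision baseline among non-PEPs, and stricter alerting when context is present, meaning a name collision is much less likely to raise an alert against an enriched record.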

| Metric | Pergamon Enriched Lists | Legacy |
|---|---|---|
| Alerts per 1K | 1.14 | 32.87 |
| False positives (% of alerts) | 14.0% | 97.3% |
| Precision (TP / Alerts) | 85.98% | 2.74% |
| Recall (TP / True PEPs) | 98.00% | 90.00% |
| TP (of 100 PEPs) | 98.0 | 90.0 |
| FP per 1K | 0.16 | 31.97 |

Data note: Name-collision baselines were calibrated using Statistics Canada's 2021 Census first-name frequencies. The more concentrated a population's first names, the higher the collision risk when DOB and role dates are missing.
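As a check on the 4% baseline, here is the arithmetic worked through. The per-list probability that a name collision raises an alert is not stated above; back-solving from the FP-per-1K row, the table is consistent with about 0.4% for Pergamon and 80% for Legacy, so treat those two rates as inferred rather than sourced.

$$
\begin{aligned}
\text{True PEPs} &= 100{,}000 \times 0.001 = 100 \\
\text{Non-PEP name collisions} &= 100{,}000 \times 0.999 \times 0.04 = 3{,}996 \\[6pt]
TP_{\text{Pergamon}} &= 100 \times 0.98 = 98, \qquad FP_{\text{Pergamon}} = 3{,}996 \times 0.004 \approx 15.98 \\
\text{Precision}_{\text{Pergamon}} &= 98 / (98 + 15.98) \approx 85.98\% \\[6pt]
TP_{\text{Legacy}} &= 100 \times 0.90 = 90, \qquad FP_{\text{Legacy}} = 3{,}996 \times 0.80 = 3{,}196.8 \\
\text{Precision}_{\text{Legacy}} &= 90 / (90 + 3{,}196.8) \approx 2.74\%
\end{aligned}
$$

Dividing total alerts by 100 (the number of 1K blocks in 100,000 clients) gives the 1.14 and 32.87 alerts per 1K in the table.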

Visual Comparison

The difference is stark when visualized:

Math & Validation Appendix

When you have to show the proof and the numbers actually add up.

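Simulation math

For each list $s \in \{\text{Pergamon}, \text{Legacy}\}$:

$$
\begin{aligned}
TP_s &= N \cdot \pi \cdot r_s \\
FP_s &= N \cdot (1 - \pi) \cdot p_{\text{name-coll}} \cdot p(\text{alert} \mid \text{collision})_s \\
\text{Alerts}_s &= TP_s + FP_s \\
\text{Precision}_s &= TP_s / \text{Alerts}_s \\
\text{FP\%}_s &= FP_s / \text{Alerts}_s \\
\text{Alerts per 1K}_s &= \text{Alerts}_s / (N / 1000) \\
\text{FP per 1K}_s &= FP_s / (N / 1000)
\end{aligned}
$$

where $N$ is the number of screened clients (100,000), $\pi$ the true PEP prevalence (0.1%), $r_s$ the recall of list $s$, $p_{\text{name-coll}}$ the non-PEP name-collision baseline, and $p(\text{alert} \mid \text{collision})_s$ the probability that a name collision raises an alert under list $s$ (lower when the list supplies context).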

Sensitivity (name-collision = 2%, 4%, 6%)

| Name-collision rate | Alerts/1K (Pergamon) | FP % (Pergamon) | Precision (Pergamon) | Alerts/1K (Legacy) | FP % (Legacy) | Precision (Legacy) |
|---|---|---|---|---|---|---|
| 2% | 1.06 | 7.5% | 92.46% | 16.88 | 94.7% | 5.33% |
| 4% | 1.14 | 14.0% | 85.98% | 32.87 | 97.3% | 2.74% |
| 6% | 1.22 | 19.7% | 80.34% | 48.85 | 98.2% | 1.84% |
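A minimal Python sketch of the appendix formulas, useful for rerunning the sensitivity sweep with your own assumptions. It assumes alert-on-collision rates of 0.4% (enriched) and 80% (legacy); those two values are back-solved from the tables, not stated in the source. `ListProfile` and `simulate` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class ListProfile:
    name: str
    recall: float              # r_s: share of true PEPs that alert
    p_alert_given_coll: float  # p(alert | collision)_s, back-solved (assumption)


def simulate(profile: ListProfile, n: int = 100_000, prevalence: float = 0.001,
             p_name_coll: float = 0.04) -> Dict[str, float]:
    """Apply the appendix formulas for one list at one collision baseline."""
    tp = n * prevalence * profile.recall
    fp = n * (1 - prevalence) * p_name_coll * profile.p_alert_given_coll
    alerts = tp + fp
    blocks_of_1k = n / 1000
    return {
        "tp": tp,
        "alerts_per_1k": alerts / blocks_of_1k,
        "fp_per_1k": fp / blocks_of_1k,
        "fp_pct": 100 * fp / alerts,
        "precision_pct": 100 * tp / alerts,
    }


# Recall values come from the main table; alert-on-collision rates are inferred.
PERGAMON = ListProfile("Pergamon", recall=0.98, p_alert_given_coll=0.004)
LEGACY = ListProfile("Legacy", recall=0.90, p_alert_given_coll=0.80)

for coll in (0.02, 0.04, 0.06):
    cells = [f"{coll:.0%}"]
    for profile in (PERGAMON, LEGACY):
        m = simulate(profile, p_name_coll=coll)
        cells += [f"{m['alerts_per_1k']:.2f}", f"{m['fp_pct']:.1f}%",
                  f"{m['precision_pct']:.2f}%"]
    print(" | ".join(cells))
```

Each printed row matches the corresponding row of the sensitivity table at its reported precision. Because recall is held fixed, every extra point of name collision lands almost entirely in the Legacy false-positive column.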

The Bottom Line

"It's not your screening model. It's your control list." - change my mind.

© 2025 Pergamon Labs - Context-first PEP control lists.